Dr. Jamie McClave Baldwin

In an article on the dos and don’ts of statistical significance, published in The American Statistician, Dr. Ronald Wasserstein et al. wrote the following:

“We summarize our recommendations in two sentences totaling seven words: ‘Accept uncertainty. Be thoughtful, open, and modest.’”

It seems like simple advice, applicable to almost anything and hard to disagree with. So what’s all the fuss, and why does it need to be stated by the highest authorities in the statistical world?

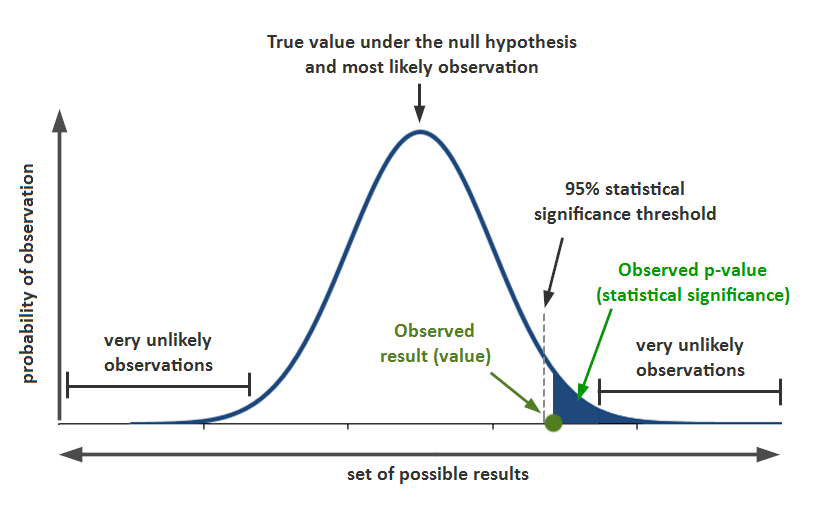

The heart of the debate goes like this. Many academic journals have long required a study to show statistical significance, usually meaning a p-value below .05, before it could be published. On the surface this seemed reasonable: a study needed to show some sort of important effect to be added to the body of knowledge in its field. Unfortunately, the requirement invited abuse. Authors would “p-hack,” manipulating their analyses until the results reached statistical significance and could be published. So now these journals face a conundrum.
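To make the p-hacking problem concrete, here is a minimal Python sketch of one common form of it: testing many unrelated outcomes and reporting whichever one crosses .05. It assumes a simple two-sample z-test with a known standard deviation and simulated data in which there is no real effect at all. The function names and parameters are illustrative assumptions, not taken from any particular study or library.

```python
import math
import random
from statistics import NormalDist, fmean


def z_test_p_value(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known common sigma."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(num_outcomes, n=30, trials=1000, alpha=0.05, seed=1):
    """Fraction of simulated no-effect studies that report at least one
    'significant' result when the analyst tests num_outcomes unrelated
    outcomes and keeps the smallest p-value (a hypothetical analysis strategy)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        p_values = []
        for _ in range(num_outcomes):
            # Both groups are drawn from the same distribution: there is no real effect.
            a = [rng.gauss(0, 1) for _ in range(n)]
            b = [rng.gauss(0, 1) for _ in range(n)]
            p_values.append(z_test_p_value(a, b))
        if min(p_values) < alpha:
            hits += 1
    return hits / trials


for k in (1, 5, 20):
    theory = 1 - (1 - 0.05) ** k  # chance of at least one false positive in k independent tests
    print(f"{k:>2} outcomes tested: simulated {false_positive_rate(k):.2f}, theory {theory:.2f}")
```

With a single pre-specified outcome, roughly 5% of these no-effect studies come out “significant,” as the .05 threshold intends. Testing 20 outcomes and keeping the best one raises that rate to about 1 − 0.95^20 ≈ 64%, which shows how a publication filter on p < .05 can reward false positives.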

If they drop the statistical significance requirement, do they risk letting in junk science and irrelevant material? Or if they keep the requirement, are they encouraging bad scientific practice, false positives, and a myriad of other scientific problems? The answer suggested by the statistics community, and practiced by Infotech since its inception, is that framing it as a choice between these two options is a false dilemma. Neither extreme is right, and those extremes are not the only choices. Context is essential; honesty is crucial; and integrity is everything. The statistician is not just a person pressing a magic button that produces mysterious results only he or she can unlock. Statistics is a toolbox, and the statistician is the handywoman.

Wasserstein’s ultimate advice is right: accept uncertainty. The p-value may shed light on how much uncertainty there is, but it does not eliminate it, full stop.

Throwing out p-values altogether would be inappropriate and would disregard hundreds of years of statistical theory. But recognizing that p-values have limitations and must be considered in context (How large is the sample? Are the results also practically significant? Do other tests confirm them?) is also a necessary part of science, and so is studying new and improved tools for evaluating hypotheses. In the end, an effect that doesn’t reach statistical significance may still point toward future research or different avenues to explore. It may reveal another statistical problem, such as multicollinearity, too little information, confounded effects, or omitted variables. Or it may tell you that there is no relationship between the variables of interest. No news is neither good news nor bad news, but it is news.
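The point about sample size and practical significance can be illustrated with another small Python sketch. It holds a hypothetical, practically negligible difference of 0.02 standard deviations fixed and computes the p-value at several sample sizes. It assumes a known-variance two-sample z-test and, to isolate the role of sample size, an observed difference exactly equal to that fixed value; both are simplifying assumptions, not a description of any real study.

```python
import math
from statistics import NormalDist


def p_value_for_observed_difference(diff, n_per_group, sigma=1.0):
    """Two-sided z-test p-value when two groups of size n_per_group
    differ in mean by diff (known common sigma assumed)."""
    se = sigma * math.sqrt(2 / n_per_group)
    z = diff / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical effect: 2% of a standard deviation, negligible in most practical settings.
effect = 0.02
for n in (100, 1_000, 10_000, 100_000):
    p = p_value_for_observed_difference(effect, n)
    print(f"n = {n:>7,} per group: p = {p:.3g}")
```

The effect never changes, yet with enough data the p-value falls far below .05. A small p-value on its own says little about whether an effect is large enough to matter, which is why it has to be read alongside the effect size and the context of the study.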